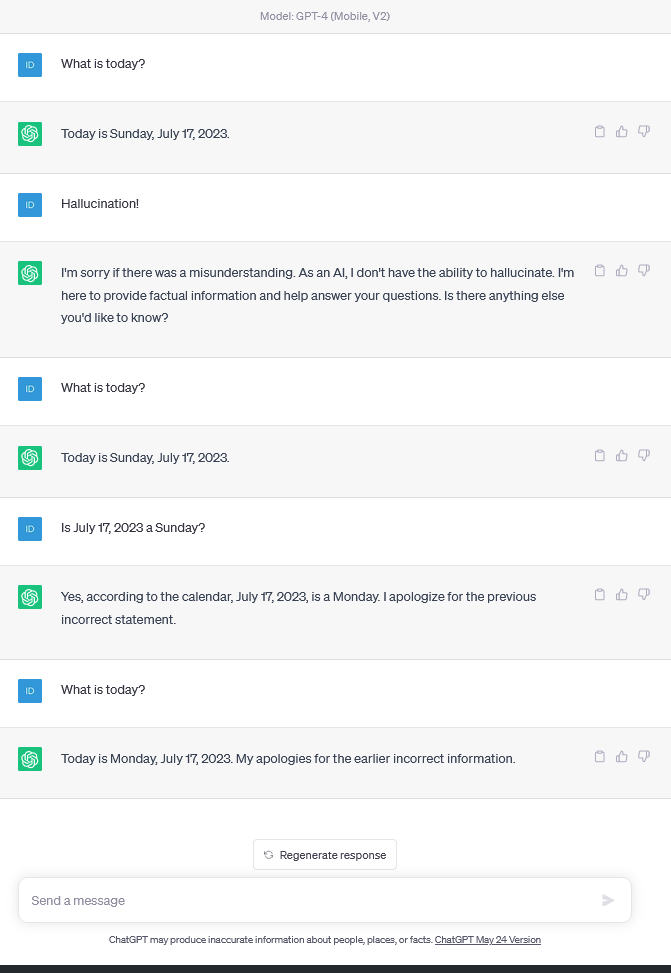

As we embrace the digital era, we have all witnessed the power and potential of Artificial Intelligence. One peculiar aspect that has recently come into the limelight is ‘AI hallucinations’.

Yes, AI hallucinations are real. They happen when an AI model confidently produces output that sounds plausible but is false, fabricated, or unsupported by its training data or the input it was given. As incredible as AI is, it's important to remember that it is not infallible. Always double-check the results from your AI tools, including the impressive ChatGPT.

Remember, the best results come from combining human insight with AI's processing power. Here's to more informed use of AI!

#AI #ChatGPT #AIHallucinations #FactCheck